Proud to share that our AI activities have been recognized with the official Seal of Innovation Competence. The BSFZ seal is awarded on behalf of the Federal Ministry of Research, Technology and Space by the Certification Office for Research Allowance (BSFZ).

This recognition confirms that our AI projects are not only innovative and future-oriented, but also comply with the high standards set by the research funding program. It is an official acknowledgment of our research excellence and our contributions to technological progress in Germany.

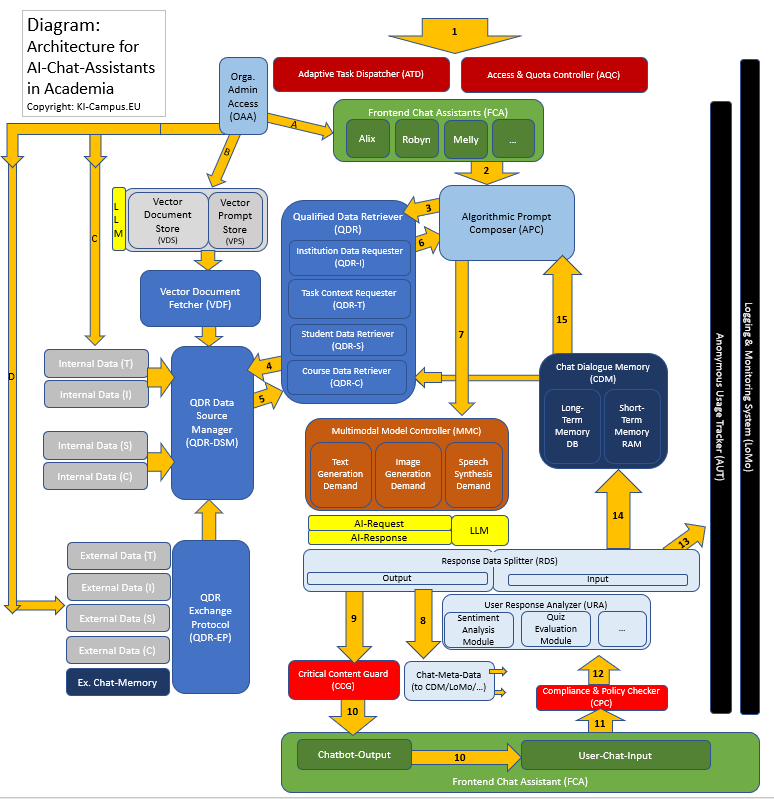

A key aspect recognized by the seal is our approach of “combining generative AI with higher education context data to create a new functional level of personalized educational assistance.” Parts of this are still a vision under development, but it is already becoming tangible in our projects. In other words, we connect state-of-the-art AI technologies with the real needs of study and campus management, today and tomorrow.

For us, the seal is both recognition and motivation to keep bringing AI into higher education in a practical, responsible, and impactful way.