What legal risks do universities face when they use chatbots? Can a chatbot's wrong answer lead to legal issues? Absolutely! In a 2022 experiment with thousands of students, we observed that roughly 10% of chatbot responses could have caused legal problems for the university. For example, suppose a student asks, ‘I am ill. Do I need to send a doctor’s certificate?’ and the official chatbot incorrectly responds, ‘No, just stay at home and get well.’ If the student is later de-registered for not submitting the certificate, the university could face legal consequences.

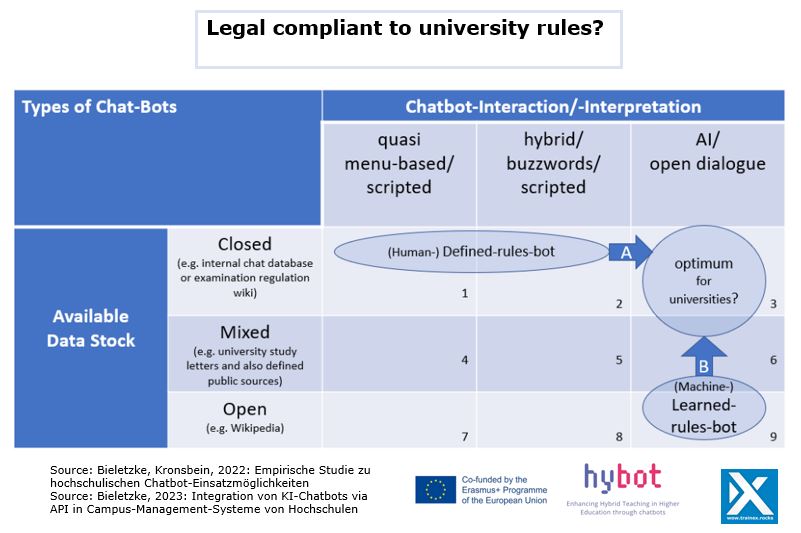

Are scripted chatbots a solution? On the plus side, scripted chatbots rarely give wrong answers: they always follow predefined rules and typically draw on a limited database, which makes them generally safe (Position 1 in our matrix). On the downside, interacting with scripted chatbots can be unsatisfying because they struggle to understand the user’s intent or to respond to unforeseen questions. In SMARTA, we chose not to pursue improving scripted bots (Development-path A).
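To make the trade-off concrete, a scripted chatbot of the kind described above can be pictured as a fixed table of rules, each mapping required keywords to a pre-approved answer, plus a fallback. This is a minimal sketch under our own assumptions: the rules, keywords, and answer texts are hypothetical placeholders, not SMARTA code or real university policy.

```python
import re

# Hypothetical rule table: (keywords that must all appear, pre-approved answer).
# The answer texts are placeholders, not real university policy.
RULES: list[tuple[set[str], str]] = [
    ({"ill", "certificate"}, "[Pre-approved text from the examination office on sick notes]"),
    ({"exam", "register"}, "[Pre-approved text on exam registration]"),
]

FALLBACK = "Sorry, I cannot answer that. Please contact the student service desk."


def scripted_reply(question: str) -> str:
    """Return the first pre-approved answer whose keywords all occur in the question."""
    words = set(re.findall(r"[a-z']+", question.lower()))
    for keywords, answer in RULES:
        if keywords <= words:  # every required keyword is present
            return answer
    return FALLBACK  # unforeseen wording: the bot cannot infer intent
```

Because every answer is pre-approved, the bot cannot invent a wrong rule. The price is visible with a simple paraphrase: "I'm sick, must I hand in a medical note?" contains none of the expected keywords and only gets the fallback.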

Position 9 of the matrix is occupied by an AI chatbot such as ChatGPT. These chatbots understand user questions and respond in fluent sentences, which leads to high user satisfaction. However, the risk of legal issues is higher because of the data they draw on. For instance, the chatbot might not know a specific university’s examination regulations and might instead cite another university’s regulations from its general training data. Therefore, we need both to limit (e.g., ‘do not hallucinate faculty staff’) and to expand (e.g., ‘use these specific examination regulations’) the data the chatbot uses; this is Development-path B. At SMARTA, we are pursuing this path to control the input, processing, and output of the chatbot.
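One way to picture Development-path B is a thin control layer around the language model: check the input against the university's own regulations, restrict the prompt to those passages, and reject output that does not cite them. This is a minimal sketch under stated assumptions, not SMARTA's actual pipeline: the regulation excerpts and passage IDs are hypothetical placeholders, keyword overlap stands in for a real retrieval method, and `generate` stands for whatever model call is used.

```python
import re
from typing import Callable

# Hypothetical excerpts standing in for one university's own examination regulations.
REGULATIONS = {
    "REG-1": "[Placeholder] What applies when a student is ill on an exam date; medical certificate rules.",
    "REG-2": "[Placeholder] How to register for and withdraw from an exam.",
}
STOPWORDS = {"a", "an", "the", "i", "am", "is", "do", "to", "on", "for", "and", "from", "when"}
REFUSAL = "I can't answer this reliably. Please contact the examination office."


def tokens(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def retrieve(question: str, k: int = 2) -> list[tuple[str, str]]:
    """Input control: keep only passages sharing at least one content word with the question."""
    q = tokens(question)
    scored = [(len(q & tokens(t)), pid, t) for pid, t in REGULATIONS.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(pid, t) for _, pid, t in scored[:k]]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Processing control: limit the model to the given passages (hypothetical wording)."""
    context = "\n".join(f"[{pid}] {text}" for pid, text in passages)
    return (
        "Answer only from the passages below. Do not invent names of faculty staff.\n"
        "Cite the passage ID in square brackets. If the passages do not answer the "
        "question, say you don't know.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


def answer(question: str, generate: Callable[[str], str]) -> str:
    passages = retrieve(question)
    if not passages:
        return REFUSAL  # nothing relevant in our own regulations: don't ask the model
    draft = generate(build_prompt(question, passages))
    # Output control: the draft must cite only passages we actually supplied.
    cited = set(re.findall(r"\[([^\]]+)\]", draft))
    allowed = {pid for pid, _ in passages}
    if not cited or not cited <= allowed:
        return REFUSAL
    return draft
```

For the sick-note question, only `REG-1` is retrieved, so a draft citing `[REG-1]` is passed through, while a draft citing a passage that was never supplied is replaced by the refusal. A question with no match in the regulations, such as one about the dean, is refused without calling the model at all.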